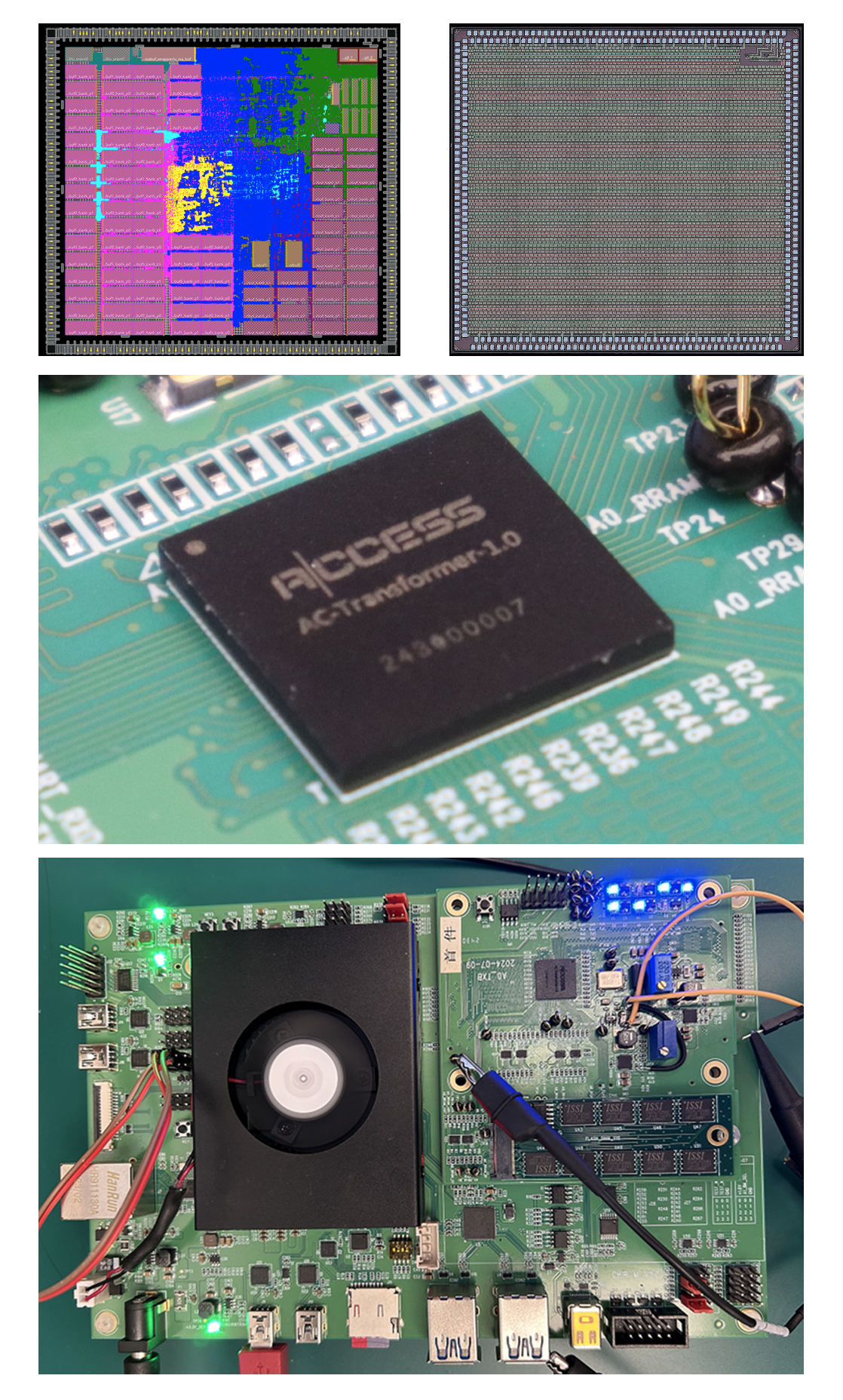

AC-Transformer: High Power-Efficiency AI Chip


Technology Advantages

- Built on an algorithm-hardware co-design strategy and our self-developed AI chip design automation toolset to create high-efficiency AI chips

- Native support for both Transformer and CNN architectures, enabling multimodal AI computing and flexible scaling from a single-chip system to multi-chip clusters

- Significantly improved energy efficiency for large-model inference, with the work published at ISSCC 2025


Chip Highlights

- Seamless support for sparse computation in both attention and convolution operations, delivering a 300% improvement in computational efficiency

- Dedicated hardware architectures for nonlinear operators, supporting 18 types of complex computations such as Softmax, LayerNorm, and GeLU

- An intelligent memory scheduling strategy that reduces storage pressure by 60% through layer fusion and operator fission
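
For readers unfamiliar with the nonlinear operators named above, the sketch below gives their standard textbook definitions in floating-point Python. It is only a reference for what the operators compute. It does not describe how the chip implements them in INT8 hardware, and the function names and the `eps` default are illustrative assumptions.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)  # subtract the maximum so exp() cannot overflow
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def layer_norm(xs, eps=1e-5):
    """LayerNorm without learned scale/shift (eps is an assumed default)."""
    mean = sum(xs) / len(xs)
    var = sum((x - mean) ** 2 for x in xs) / len(xs)
    return [(x - mean) / math.sqrt(var + eps) for x in xs]


def gelu(x):
    """Exact GeLU: x * Phi(x), where Phi is the standard normal CDF."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))
```

Each of these needs exponentials, divisions, square roots, or the error function, none of which maps onto a plain multiply-accumulate array. That is the usual motivation for dedicated nonlinear-operator hardware.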


Chip Specifications

| Parameter | Value |
| --- | --- |
| Process node | TSMC 28nm HPC+ |
| Metal stack | 1p9m_6X1Z1U |
| Voltage | I/O: 1.8 V; Core: 0.65 V to 1.0 V |
| Data precision | INT8 |
| Clock frequency | 200 to 625 MHz |
| Peak performance | 2.56 TOPS |
| Logic gates | 6M |
| SRAM size | 3.28 MB |
| Hard IP | PLL |
| Soft IP | ARM M0 |
| Pad count | 384 |
| Clock domains | 4 |
| Power (with full NN payload) | Min: 0.16 W @ 0.65 V, 200 MHz; Typ: 0.75 W @ 0.9 V, 500 MHz |
| Die size | 3.87 mm x 3.60 mm |
| Packaging | WB-BGA (11 mm x 11 mm) |
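
The specification figures can be cross-checked with a little arithmetic. The sketch below rests on three assumptions that the sheet does not state: the peak figure is reached at the 625 MHz maximum clock, one multiply-accumulate (MAC) counts as two operations, and peak throughput scales linearly with clock frequency. The function names are illustrative.

```python
PEAK_TOPS = 2.56    # peak performance from the specification table
F_MAX_HZ = 625e6    # assumption: peak is quoted at the maximum clock
OPS_PER_MAC = 2     # assumption: 1 MAC = 1 multiply + 1 add


def implied_mac_units(peak_tops=PEAK_TOPS, f_hz=F_MAX_HZ):
    """Number of parallel INT8 MAC units implied by the peak figure."""
    return peak_tops * 1e12 / (OPS_PER_MAC * f_hz)


def tops_at(f_hz):
    """Peak TOPS at another clock, assuming linear scaling with frequency."""
    return PEAK_TOPS * f_hz / F_MAX_HZ


def tops_per_watt(f_hz, power_w):
    """Estimated peak energy efficiency at a given operating point."""
    return tops_at(f_hz) / power_w


if __name__ == "__main__":
    print(f"Implied MAC units: {implied_mac_units():.0f}")
    print(f"Typ (0.9 V, 500 MHz, 0.75 W): {tops_per_watt(500e6, 0.75):.2f} TOPS/W")
    print(f"Min (0.65 V, 200 MHz, 0.16 W): {tops_per_watt(200e6, 0.16):.2f} TOPS/W")
```

Under these assumptions the peak figure corresponds to about 2048 MAC units, and the two listed power points work out to roughly 2.7 TOPS/W (typical) and 5.1 TOPS/W (minimum). These are estimates derived from the table, not measured efficiency numbers.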